When it comes to cloud migrations, not every scenario plays by the book. Our recent migration of a Kentico project to Azure was supposed to be straightforward—until we were handed a .bak file instead of the expected .bacpac. This blog post unpacks the unique challenges we faced and provides a detailed walkthrough of our solution.

Note: You might wonder why we didn’t simply restore the database directly on our Azure server using SSMS (SQL Server Management Studio). It’s important to understand that Azure SQL Server does not support restoring from a .bak file directly. Instead, Azure requires the use of .bacpac files for database imports. This distinction is crucial for planning migrations and requires alternative strategies when traditional backups are not feasible.

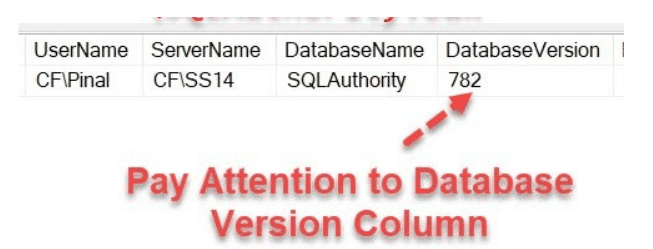

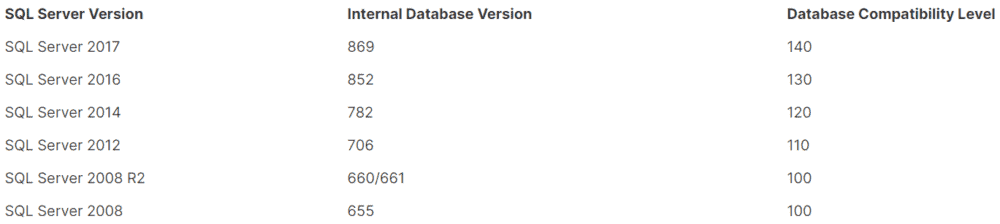

Before diving into the complexities of migration, it’s crucial to understand the environment we’re dealing with. One of the first hurdles we encountered involved identifying the SQL Server version of the .bak file provided, as mismatched versions between the source and target can complicate restorations.

Typically, this information isn’t accessible without restoring the database, which can be time-consuming and resource-intensive. However, there’s a more efficient approach: using a SQL query to extract this information directly from the .bak file. By running the following SQL command, we can peek into the .bak file to retrieve essential details about the SQL Server environment without the need for a full restore:

RESTORE HEADERONLY FROM DISK = 'C:\PathToYourBackup\YourBackupFile.bak'

Replace ‘C:\PathToYourBackup\YourBackupFile.bak’ with the actual path to your .bak file. This query provides a snapshot of the database’s metadata, including the version, which is instrumental in planning a smooth migration.

Once you have determined the SQL Server version of your .bak file, you may encounter scenarios where it is incompatible with all your available SQL Server versions. If this happens, consider the following options:

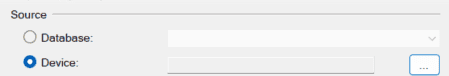

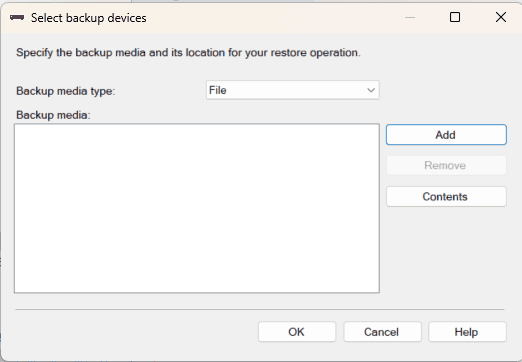

If your .bak file is compatible with your target SQL Server version, the next step is to restore the database. This process can seem daunting, but with the right commands and a careful approach, it can be executed smoothly. Here’s a step-by-step guide to help you restore your database from a .bak file:

This process will transfer the data from the .bak file back into a live SQL Server environment, effectively recreating the original database structure and contents on your target server.

Due to the substantial size of certain tables within our database, a direct export to a .bacpac file was not feasible, as it would routinely cause timeouts. To circumvent this issue, we opted to individually extract these large tables using the Bulk Copy Program (bcp). This method allows us to offload the data efficiently and handle it separately, which is crucial for managing the database size during migration.

Important: Before proceeding with the BCP process, ensure that any foreign key constraints related to these large tables have been properly handled. Refer to the “Handling Foreign Key Constraints During Large Table Exports” section further in this article for detailed instructions on how to drop and reapply these constraints to avoid complications during the migration process.

For example, to export a large table, you can use the following bcp command in an administrative command shell:

bcp dbo.YourTableName out "<YourFilePath>\<FileName>.dat" -d YourDatabaseName -n -T -S YourSQLServerInstance

Note: if Windows authentication isn’t an option for connecting to SQL Server, use:

bcp YourTableName out "<YourFilePath>\<FileName>.dat" -d YourDatabaseName -S YourServerInstance -U YourUsername -P YourPassword -n

Replace dbo.YourTableName with the name of your large table, <YourFilePath>\<FileName>.dat with the path where you want to store the exported data, YourDatabaseName with the name of your database, and YourSQLServerInstance with your SQL Server instance name. By executing this command, the specified table’s data is exported into a .dat file, effectively reducing the load and complexity involved in the main database export process. This technique not only simplifies the handling of large datasets but also ensures that all parts of the database are backed up with integrity and are ready for a smooth transition.

When dealing with large tables that have foreign key constraints, there’s an additional step to consider. Since foreign keys enforce relationships between tables, they can complicate the export process, especially when using bcp or excluding these tables from the .bacpac file. Here’s how to manage this:

In our case, we were fortunate that our large tables didn’t have any foreign key constraints, simplifying the process. However, this may not be the case for others, so it’s important to prepare for this scenario.

After handling the large tables with the bcp command, the next step in our migration process involves exporting the remainder of the database into a .bacpac file. This file format is ideal for Azure migrations but requires a careful approach when dealing with oversized data. Here’s how to efficiently exclude the large tables during the export:

By strategically excluding large tables during the .bacpac export, we ensure the file remains manageable and tailored for Azure migration. This approach minimizes potential timeouts and errors associated with large database sizes, streamlining the migration process to Azure.

Once the .bacpac file has been successfully exported without the oversized tables, the next phase involves setting up your Azure SQL Database and integrating the large tables you managed separately. This two-step process ensures that all components of your original database are accurately recreated in the Azure environment. Here’s how to complete this integration effectively:

To deploy your .bacpac file to Azure, follow these steps, which include creating a new SQL database directly from the .bacpac file:

To deploy your .bacpac file to Azure, follow these steps, which include creating a new SQL database directly from the .bacpac file:

bcp YourTableName in "<YourFilePath>\\<FileName>.dat" -d YourAzureDatabaseName -n -T -S YourAzureServer

By carefully setting up the Azure SQL Database and methodically integrating the large tables, you can ensure a complete and functional migration that mirrors your original database environment. This meticulous approach not only secures data integrity but also enhances the overall performance and scalability of your database in the cloud.

In conclusion, migrating a project to Azure involves more than just transferring data; it requires strategic planning and careful execution, especially when dealing with large databases. By employing methods such as using bcp for large tables and efficiently managing .bacpac imports, we can overcome the limitations posed by traditional migration methods. The steps outlined in this guide not only simplify the process but also ensure that every component of the database is accurately recreated in the Azure environment, maintaining integrity and performance. As cloud technologies continue to evolve, so too do our approaches to optimizing these migrations, enabling businesses to leverage the full potential of cloud computing.

Stay up to date on what BizStream is doing and keep in the loop on the latest in marketing & technology.